Our chief way of conceptualizing information processing is through computation, which is a linear process. While computational processes can connect or categorize information, the capacity and flexibility of computation are limited by the computer’s linear, “word at a time” processing architecture. Because stored program instructions and the data they operate on share the same path between memory and processor, the two cannot be accessed simultaneously, a problem known as the von Neumann bottleneck. This bottleneck may represent a fundamental functional limitation of computation, unless an entirely new approach, such as quantum computing, is developed.

The von Neumann bottleneck occurs when memory and computing units are separate and connected by a bus, so that data moves more slowly than it can be computed on. This creates a significant efficiency problem for AI computing. The main reason is that, while an AI model runs, the largest share of energy goes to data transfers that carry model weights back and forth between memory and compute (https://research.ibm.com/blog/why-von-neumann-architecture-is-impeding-the-power-of-ai-computing).
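A rough arithmetic sketch makes this point concrete. Take a single fully connected layer that multiplies an m × n weight matrix by a batch of input vectors. Assume 16-bit storage (2 bytes per value) and, as a deliberate simplification, no caching, so every weight, input, and output crosses the memory bus exactly once. The ratio of arithmetic work to bytes moved, called arithmetic intensity, can then be computed directly. The layer size is hypothetical.

```python
# Rough arithmetic-intensity estimate for one fully connected layer, Y = W @ X.
# Assumptions (illustrative only): fp16 storage (2 bytes per value) and no caching,
# so every weight, input, and output value crosses the memory bus exactly once.

BYTES_PER_VALUE = 2  # fp16


def arithmetic_intensity(m: int, n: int, batch: int) -> float:
    """Return FLOPs per byte moved when an m x n weight matrix multiplies `batch` input vectors."""
    flops = 2 * m * n * batch                  # one multiply and one add per weight, per input vector
    weight_bytes = m * n * BYTES_PER_VALUE     # weights are fetched once and reused across the batch
    input_bytes = n * batch * BYTES_PER_VALUE
    output_bytes = m * batch * BYTES_PER_VALUE
    return flops / (weight_bytes + input_bytes + output_bytes)


if __name__ == "__main__":
    m = n = 4096  # hypothetical layer size
    for batch in (1, 8, 64):
        print(f"batch={batch:>3}: {arithmetic_intensity(m, n, batch):.2f} FLOPs per byte")
```

With a batch of one, the layer performs only about one floating-point operation per byte fetched. On processors whose peak arithmetic rate far exceeds their memory bandwidth, the memory traffic therefore sets the pace. The ratio rises roughly in proportion to batch size because each fetched weight is reused.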

Data-centric computation and the scalability limits of current computing systems call for the development of alternatives to von Neumann architecture, with in-memory computing emerging as an energy efficient solution that could reduce computational complexity by performing both computational tasks and data storage in the same place (https://www.nature.com/articles/s41565-020-0738-x). Quantum computers leverage the principles of quantum mechanics to process information using qubits that can exist in superposition states, allowing them to perform many calculations simultaneously and offering exponential speedups for certain problems (https://decentcybersecurity.eu/from-classical-to-quantum-bridging-the-divide-between-von-neumann-architecture-and-the-future-of-computing/).
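One widely studied form of in-memory computing uses a crossbar array of resistive memory cells to multiply a matrix by a vector in the same place where the matrix is stored. Each cell's conductance encodes one matrix entry. Input voltages are applied to the rows, and by Ohm's law each cell passes a current proportional to its conductance times the voltage. By Kirchhoff's current law, those currents then sum along each column. The sketch below simulates an idealized crossbar. It assumes no device noise, wire resistance, or converter precision limits, all of which real devices must handle, and the values are made up for illustration.

```python
# Idealized simulation of analog in-memory matrix-vector multiplication on a
# resistive crossbar. Assumptions: perfectly linear devices, no wire resistance,
# no noise, and ideal digital-to-analog / analog-to-digital conversion.

from typing import List


def crossbar_mvm(conductances: List[List[float]], voltages: List[float]) -> List[float]:
    """Return the column currents of a crossbar.

    conductances[i][j] is the conductance (siemens) of the cell joining row i to column j;
    voltages[i] is the voltage (volts) applied to row i.
    """
    rows = len(conductances)
    cols = len(conductances[0])
    if len(voltages) != rows:
        raise ValueError("need one input voltage per row")
    currents = [0.0] * cols
    for i in range(rows):
        for j in range(cols):
            # Ohm's law: each cell passes current I = G * V.
            # Kirchhoff's current law: currents flowing into the same column add up.
            currents[j] += conductances[i][j] * voltages[i]
    return currents


if __name__ == "__main__":
    G = [[1e-6, 2e-6],   # hypothetical conductances: the stored "weights"
         [3e-6, 4e-6],
         [5e-6, 6e-6]]
    V = [0.1, 0.2, 0.3]  # hypothetical input voltages: the "input vector"
    print(crossbar_mvm(G, V))  # column currents in amperes, i.e. G^T V
```

The stored weights never leave the array. Only the input voltages and the output currents move, and that reduced data movement is the efficiency argument for this approach.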

AI and Abstract Understanding

Limitations such as these have fed the broader claim that computation, as presently constituted, will never be able to abstract or forge the novel connections, categorizations, and symbolic representations necessary to produce new understanding (Penrose, 1989, 1994). Computers presently lack an algorithm capable of metacognitive, inquisitive, creative, and spontaneous understanding. Similarly, seemingly automatic functions of human cognition, such as the ability to make analogies and metaphors, are far from automatic in computational systems. They seem to require humans to interpret the data and apply human understanding to the production of new algorithms that support the computational process (Domingos, 2015).

The difference between human and AI information processing is akin to the difference between being able to follow a list of instructions and being able to generate one. There have been several examples of modern neural-network-style computation systems producing computer programs and even articles for major publications (Keohane, 2017). Even so, the capabilities of these programs rest on relationships discovered by humans and added to the system as a list of instructions for organizing the data it can access. They do not result from genuine understanding.

Neural Networks and Computation

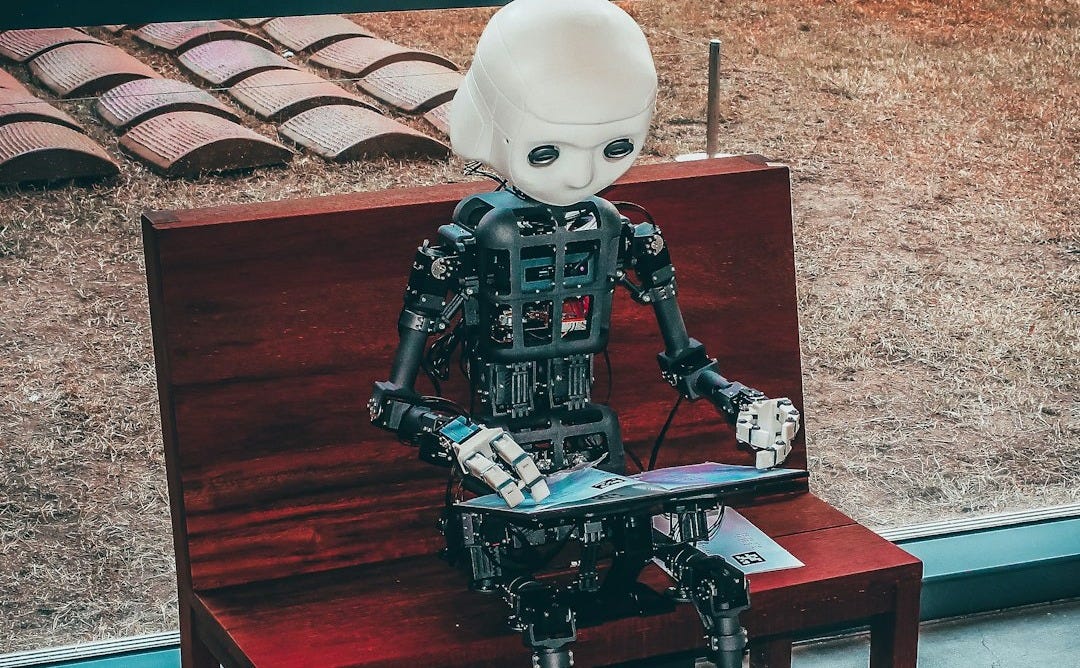

The problem of producing computer intelligence is analogous to an age-old debate in education. One side favors teaching discipline and the skills that follow from direction, and the other favors fostering critical thinking and the skills that follow from creativity. Human beings can do both reasonably well. Computers, by contrast, seem able only to follow directions, albeit far faster and more accurately than humans. There is presently wide agreement that the advanced cognitive capabilities of humans arise from the integration of neural networks dedicated to specific types of processing (Churchland, 2011; Medina, 2011; Swanson, 2003; Gerhard et al., 2011). This view best fits the findings from the various lines of investigation covered so far in this discussion.

This approach is further supported by advances in machine learning. Artificial neural networks have solved several computational problems once considered intractable, such as image identification and speech recognition, along with other tasks where rigid numerical computation proved too inflexible (Zhang et al., 1998; Domingos, 2015). Historically, computational learning models have been limited by the linear nature of computational coding, insufficient storage, and limited networking capability.
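The contrast with hand-engineered rules is visible even in the simplest artificial neuron, the perceptron. The sketch below is never told the rule for logical AND. It starts with zero weights and adjusts them only when it misclassifies a training example, which is the classic perceptron learning rule. The task, learning rate, and epoch limit are arbitrary illustrative choices. Modern networks stack many such units and train them by gradient descent, so this is a historical simplification rather than a description of current systems.

```python
# Minimal perceptron trained with the classic perceptron learning rule.
# The task (logical AND), learning rate, and epoch limit are illustrative choices.

from typing import List, Tuple

Example = Tuple[List[float], int]


def predict(weights: List[float], bias: float, x: List[float]) -> int:
    """Step activation: output 1 if the weighted sum exceeds zero, else 0."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train_perceptron(data: List[Example], lr: float = 0.1, epochs: int = 20) -> Tuple[List[float], float]:
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for x, target in data:
            error = target - predict(weights, bias, x)
            if error != 0:
                # Nudge each weight toward the correct answer in proportion to its input.
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
                errors += 1
        if errors == 0:  # every example classified correctly: stop early
            break
    return weights, bias


if __name__ == "__main__":
    AND_DATA = [([0.0, 0.0], 0), ([0.0, 1.0], 0), ([1.0, 0.0], 0), ([1.0, 1.0], 1)]
    w, b = train_perceptron(AND_DATA)
    for x, target in AND_DATA:
        print(x, "->", predict(w, b, x), "(expected", target, ")")
```

The learned rule lives entirely in the final weights and bias, not in any line a programmer wrote.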

The idea of programming software that uses networks of distributed information to solve problems can actually be traced back to the earliest investigations of machine learning (Domingos, 2015). However, this line of inquiry came to be seen as a dead end because of the limits of computation. Even simple judgments can require access to massive networks of disparate information that seemed to border on the infinite. Over the last 20 years, however, computational processing hardware and storage capacity have improved exponentially.

As computer hardware has improved, so has the possibility of programming large, expansive neural networks capable of accurate problem-solving. Krizhevsky, Sutskever and Hinton (2012) were among the first researchers to demonstrate the power of such networks. They used two graphics processing units (GPUs) to train a neural network with five convolutional layers and 60 million parameters on a set of roughly 1.3 million images. Since then, convolutional neural networks (CNNs) have become standard in many search operations. They are especially common in deep learning and data-mining programs, where their many stacked convolutional layers let them detect subtle patterns in large data sets (McCann et al., 2017).
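Krizhevsky and colleagues noted that convolutional layers have far fewer connections and parameters than fully connected layers of similar size, which makes them easier to train. The reason is weight sharing. A convolutional layer reuses one small set of kernel weights at every image position, so its parameter count depends on kernel size and channel counts, not on image size. The sketch below counts parameters for a layer shaped like the first layer of their network, which uses 96 kernels of 11 × 11 over 3 color channels. For contrast, it also counts a fully connected layer mapping a 224 × 224 × 3 image to just 1,000 units. That comparison layer is a hypothetical assumption, not part of their network.

```python
# Parameter counts: convolutional layer (shared weights) vs. fully connected layer.
# Both counts include one bias per output channel / unit.


def conv2d_params(kernel_h: int, kernel_w: int, in_channels: int, out_channels: int) -> int:
    """Each output channel has one kernel spanning all input channels, plus one bias."""
    return (kernel_h * kernel_w * in_channels + 1) * out_channels


def dense_params(in_features: int, out_features: int) -> int:
    """Every input connects to every output, plus one bias per output."""
    return (in_features + 1) * out_features


if __name__ == "__main__":
    conv = conv2d_params(11, 11, 3, 96)        # shaped like the first layer in Krizhevsky et al. (2012)
    dense = dense_params(224 * 224 * 3, 1000)  # hypothetical fully connected comparison layer
    print(f"conv layer:  {conv:,} parameters")
    print(f"dense layer: {dense:,} parameters")
```

The convolutional layer needs 34,944 parameters whatever the image size. The hypothetical dense layer needs 150,529,000, and that figure grows with every added pixel.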

Recent Advances in CNNs

Recent years have seen a series of notable advances in computer vision, including image classification, semantic segmentation, object detection, and image super-resolution reconstruction, driven by the rapid development of deep CNNs. These networks excel at autonomous feature learning and representation. When a CNN model is trained on data from a practical application, it learns to extract features directly from the original input data (https://link.springer.com/article/10.1007/s10462-024-10721-6).

By 2024, CNNs had become an integral part of our digital life. Major milestones include ResNets, whose residual connections revolutionized the training of deep networks. Another was the integration of attention mechanisms, which have played a pivotal role in tasks such as image captioning, language translation, and image segmentation (https://ubiai.tools/convolutional-neural-networkupdated-2023/).
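The residual connection that ResNets introduced is simple to state. A block outputs its input plus a learned correction, y = x + F(x), instead of F(x) alone. The sketch below uses plain Python with a hypothetical two-layer F. It shows the property usually credited with easing deep-network training: when F's weights are zero, the block passes its input through unchanged. Extra blocks therefore start from the identity rather than from an arbitrary transformation. Real ResNet blocks use convolutions and batch normalization, and the original design applies a ReLU after the addition; all of these are omitted here.

```python
# Minimal residual block: y = x + F(x), with F(x) = W2 @ relu(W1 @ x).
# Weights are hypothetical; real ResNet blocks use convolutions, batch norm,
# and (in the original design) a ReLU after the addition, all omitted here.

from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def relu(v: Vector) -> Vector:
    return [max(0.0, x) for x in v]


def residual_block(x: Vector, w1: Matrix, w2: Matrix) -> Vector:
    f_x = matvec(w2, relu(matvec(w1, x)))  # the learned "residual" F(x)
    return [a + b for a, b in zip(x, f_x)]  # skip connection adds the input back


if __name__ == "__main__":
    x = [1.0, -2.0, 3.0]
    zeros = [[0.0] * 3 for _ in range(3)]
    print(residual_block(x, zeros, zeros))  # F(x) = 0, so the block returns x unchanged
```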

Although newer architectures such as Transformers have dominated both research and practical applications in recent years, CNNs are far from thoroughly understood or used to their full potential. Recent research shows that CNNs can recognize patterns in images with scattered pixels. They can also analyze complex datasets that have been transformed into pseudo images with minimal processing (https://www.nature.com/articles/s41598-024-60709-z).
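The pseudo-image idea can be sketched in a few lines. A flat record of numeric features is padded and folded into a two-dimensional grid, so that a CNN can treat it like a single-channel image. The row-major layout with zero padding used below is only one simple assumption, and the cited study's own transformation may differ. The feature values are made up.

```python
# Fold a flat feature vector into a square, single-channel "pseudo image".
# Assumptions: row-major placement and zero padding; feature values are illustrative.

import math
from typing import List


def to_pseudo_image(features: List[float], pad_value: float = 0.0) -> List[List[float]]:
    n = len(features)
    side = math.isqrt(n)
    if side * side < n:
        side += 1  # round up to the smallest square that holds every feature
    padded = list(features) + [pad_value] * (side * side - n)
    return [padded[r * side:(r + 1) * side] for r in range(side)]


if __name__ == "__main__":
    record = [0.5, 1.2, -0.3, 4.0, 2.2, 0.0, 7.1]  # hypothetical tabular record with 7 features
    for row in to_pseudo_image(record):
        print(row)
```

A CNN's kernels then slide over this grid exactly as they would over pixel data. No feature engineering is needed beyond the reshaping itself.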

The power of the CNN model is that it requires relatively little preprocessing to produce results (Domingos, 2015). Historically, it was believed that for computers to solve even rudimentary problems, every aspect of the problem would need to be hand-engineered. The programmer would have to consider every possible input to a system and pre-program each of those possibilities into a coded solution.
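At each position, a convolutional layer computes a small weighted sum over a patch of its input. The sketch below implements that operation with no padding and stride 1. Technically this is cross-correlation, which is also what most deep learning libraries compute under the name convolution. It applies a hand-set vertical-edge kernel to a tiny made-up image. In a trained CNN, the kernel values are learned from data rather than set by a programmer, which is why so little of the problem has to be hand-engineered.

```python
# "Valid" 2D convolution (cross-correlation, as in most deep learning libraries):
# no padding, stride 1. The kernel below is hand-set for illustration; a CNN learns its kernels.

from typing import List

Grid = List[List[float]]


def conv2d(image: Grid, kernel: Grid) -> Grid:
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Weighted sum of the kh x kw patch whose top-left corner is (r, c).
            row.append(sum(kernel[i][j] * image[r + i][c + j]
                           for i in range(kh) for j in range(kw)))
        output.append(row)
    return output


if __name__ == "__main__":
    # Tiny hypothetical image: dark left half (0), bright right half (1).
    image = [[0, 0, 1, 1],
             [0, 0, 1, 1],
             [0, 0, 1, 1]]
    vertical_edge = [[-1, 1],
                     [-1, 1]]
    for row in conv2d(image, vertical_edge):
        print(row)  # strongest response where dark meets bright
```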

The Challenge of Natural Communication

The problem with this computational approach is on display in early adventure-style computer games such as Colossal Cave Adventure (https://rickadams.org/adventure/), in which the player interacts with an expansive textual environment. However, gameplay was confounded by the inexact nature of human communication. Humans can express ideas using a seemingly infinite collection of word configurations, whereas computers are limited to the possibilities they were pre-programmed to consider.

Because of this computational limitation, much of the play in these early computer games revolved around the player trying to decipher which words or phrases the program could actually understand. Each entered response had to exactly match the program’s pre-programmed list of expected responses. If you are unsure of what I am describing, please go to the linked website and spend five to ten minutes playing Colossal Cave Adventure (https://rickadams.org/adventure/). You will quickly see the limitations of this style of programming for yourself, as you spend an inordinate amount of time figuring out which text commands the game will actually accept. The exercise demonstrates a raw and basic barrier between human and computer intelligence.
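The exact-match constraint can be illustrated with a toy command parser. The command table and responses below are hypothetical and are not taken from Colossal Cave Adventure itself. The first function accepts only strings that appear verbatim in its table. The second adds a fuzzy fallback using Python's standard `difflib.get_close_matches`, which tolerates a typo. It still cannot understand a phrasing nobody anticipated, such as "walk toward the north".

```python
# Toy text-adventure parser contrasting exact matching with a fuzzy fallback.
# The command table is hypothetical and only illustrates the limitation described above.

import difflib
from typing import Optional

COMMANDS = {
    "go north": "You walk north.",
    "go south": "You walk south.",
    "take lamp": "You pick up the lamp.",
}


def exact_parse(text: str) -> Optional[str]:
    """Return a response only if the input matches a pre-programmed command exactly."""
    return COMMANDS.get(text.strip().lower())


def fuzzy_parse(text: str) -> Optional[str]:
    """Fall back to the closest known command when the input is merely misspelled."""
    response = exact_parse(text)
    if response is not None:
        return response
    matches = difflib.get_close_matches(text.strip().lower(), list(COMMANDS), n=1, cutoff=0.8)
    return COMMANDS[matches[0]] if matches else None


if __name__ == "__main__":
    for attempt in ["go north", "go nrth", "walk toward the north"]:
        print(f"{attempt!r}: exact={exact_parse(attempt)!r}, fuzzy={fuzzy_parse(attempt)!r}")
```

Fuzzy matching widens the net only around strings someone already wrote down. The program still has no notion of what "north" or "walk" means.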

Despite tremendous advances in neural networks and deep learning, fundamental challenges remain in bridging the gap between computational pattern recognition and true understanding, that is, between following instructions with remarkable precision and generating genuinely novel conceptual frameworks. The evolution from von Neumann architecture limitations through neural networks to potential quantum solutions represents our ongoing quest to create machines that can truly think, not just compute.